By Susan M. McMillan, Ph.D.

I’ve had multiple conversations with teachers in which their stories start, “I’m not an assessment expert.” Perhaps they are not trained measurement scientists, but teachers are experts in the content they provide, and they are experts in what happens in their classrooms. This blog offers a knowledge base to educators who want to become experts at crafting their own classroom assessments, either by compiling questions from item banks or by writing their own items.

Step 1: Define the Relevant Content

High-quality assessments begin with detailed learning objectives that state what students should know or be able to do as a result of instruction. Start with the objectives because classroom assessment results should “accurately represent the degree to which a student has achieved an intended learning outcome.”1 Content that is irrelevant to the objectives identified for a given test or quiz should not be included because it adds to the testing and grading burden without contributing information about the learning goals.

For short formative assessments (tests or quizzes used to guide instruction), spending time evaluating detailed learning objectives may feel like overkill. Yet the objectives keep every assessment, no matter how short or long, “on track” to provide educationally useful information. Assessment items in all content areas, including physical education and the performing arts as well as reading, math, science, and social studies, must be aligned with learning goals for assessments to serve their intended purpose.

Step 2: Appreciate the Pros and Cons of Different Item Types

Educators may feel overwhelmed with options for types of items to include in their assessments when considering both online and paper/pencil assessment formats. Each type of item has advantages and disadvantages for classroom use, and most types of items fit into two broad categories: selected response and constructed response.

Selected-response items ask students to choose a correct response from a set of given options; examples include matching, true/false, and multiple-choice items. This type of item is also called “objective” because scoring the responses does not require judgement—either the response matches the answer key, or it does not.

Students can respond to many selected-response items in one sitting, which can contribute to score reliability (consistent results), although item quality is as important for reliability as the number of items.2 Selected-response items are also easy to grade, either by hand or by machine. However, the items are difficult and time-consuming to write, they are criticized because they offer students opportunities to succeed by guessing, and they are sometimes associated with assessment of the lower levels in Webb’s Depth of Knowledge (DOK) categories.3
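
To put the guessing concern in perspective, the arithmetic can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions that are not from the sources cited here: every item has the same number of options, and the student guesses blindly and independently on every item. The function name `chance_of_guessing_at_least` is hypothetical.

```python
from math import comb


def chance_of_guessing_at_least(n_items, n_options, min_correct):
    """Probability of getting at least `min_correct` items right by blind guessing.

    Assumes each item has `n_options` equally likely choices and that
    guesses on different items are independent (a binomial model).
    """
    p = 1 / n_options  # chance of guessing any single item correctly
    return sum(
        comb(n_items, k) * p**k * (1 - p) ** (n_items - k)
        for k in range(min_correct, n_items + 1)
    )


# Example: a 10-item quiz with four options per item.
expected_correct = 10 / 4  # blind guessing averages 2.5 items correct
pass_by_luck = chance_of_guessing_at_least(10, 4, 6)  # roughly a 2% chance
print(f"Average score from guessing: {expected_correct} of 10")
print(f"Chance of 6 or more correct by guessing: {pass_by_luck:.1%}")
```

Under these assumptions, guessing earns a few points on average but rarely produces a passing score on its own. Real students usually have partial knowledge, so this is a rough lower bound on what guessing contributes, not a description of any particular classroom.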

Constructed-response items ask students to produce some writing or other demonstration of knowledge. Sometimes called open-ended items, examples include extended essay, problem solving, and performance tasks. This type of item is also called “subjective” because scoring against a set of criteria or a rubric involves judgement about how well the question has been answered or the task completed.

Students can provide detailed answers or solutions to constructed-response items, but grading the responses is labor intensive and subject to variation. Constructed-response items can be easier for teachers to write, but guessing is not eliminated; it shows up as “bluffing” in student responses. Because students generally have time to respond to fewer prompts, it can also be more difficult to get a consistent measure of student learning (though including more items does not automatically increase the reliability of the score).

Technology-enhanced items (TEIs) can be either selected-response or constructed-response items. They are usually associated with assessments taken on a computer, but they can sometimes be converted for use with paper/pencil tests. For example, choosing “hot” text in an online assessment format is a selected-response item that is similar to students underlining or circling the text on a paper/pencil test. Matching and sequencing are also selected-response items that can be administered on paper or online. Online assessment items that ask students to plot points and lines on graphs or to generate equations are examples of constructed-response items that can also be completed on paper. Computer simulations offer online versions of performance tasks.

Step 3: Match Learning Objectives with Item Types

The decision about what types of items to include needs to combine practical concerns, such as how much time the assessment will take and how much effort grading will require, with a focus on the learning objectives. Clear learning objectives often include verbs that describe how students should be able to demonstrate learning, and those verbs provide clues about what type of item will best assess learning.4

When assessment items match well with learning objectives, both in content and in what they ask students to do, they are said to be “aligned.” Well-aligned items contribute to score validity (testing what you intend to test) because they ask students to demonstrate what they know in a way that is consistent with the objective.5

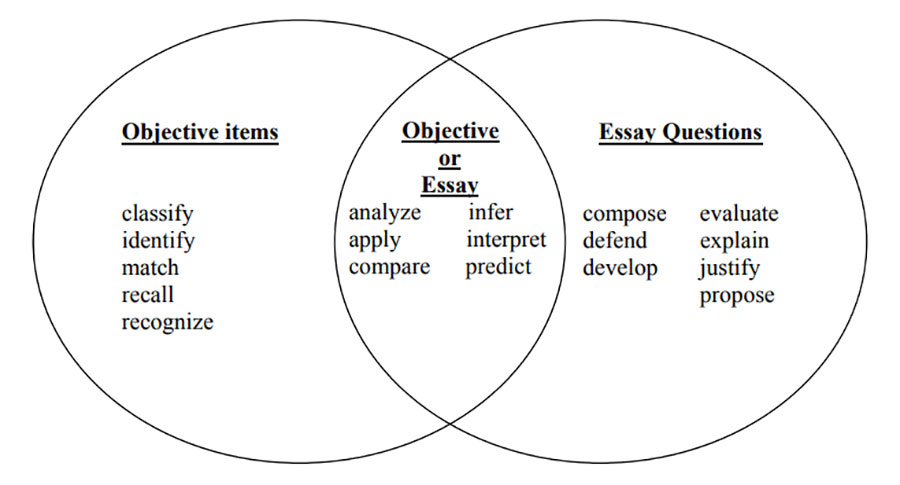

Constructed-response items are good choices when the objectives contain verbs such as “explain,” “create,” and “demonstrate.” Objectives that include verbs such as “identify,” “list,” and “classify” are consistent with selected-response items. Sometimes either selected-response or constructed-response items would be appropriate, for example when the objectives include verbs such as “apply” and “understand.”

One widely cited guide to writing assessment items provides the following Venn diagram with examples for matching the verbs in learning goals with “objective” (selected-response) and “essay” (constructed-response) items.

Figure 1: Directive Verbs and Item Types 6

There is a danger, however, in automatically equating key verbs with item types, and this figure should be taken only as an illustration. Items of any type must be structured in a way that elicits a quality response within the context of the learning objectives. Similarly, educator and blogger Robert Kaplinsky has pointed out that there is no direct translation between specific verbs and questions that assess student learning at particular DOK levels.7

Step 4: Plan the Assessment

An assessment “blueprint” is one method for planning items and ensuring that they cover the intended learning objectives. Blueprints are organized as a matrix with learning goals as column headers and item types as row labels.

Table 1 provides an example blueprint; the numbers in the cells represent the number of items on the test. The blueprint includes only multiple-choice items to assess Objective 1, while Objective 2 is tested with multiple-choice items and one short essay, and Objective 3 is covered by two multiple-choice items and one performance task. Educators can modify this example by adding their own learning objectives, adding or deleting item types as needed, and determining how many items will be needed for each objective.

Table 1: Example Assessment Blueprint

| | Objective 1 | Objective 2 | Objective 3 |
|---|---|---|---|
| Multiple Choice | 5 | 3 | 2 |
| Short Essay | | 1 | |
| Performance Task | | | 1 |
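
For teachers who keep their plans in a spreadsheet or script, the example blueprint in Table 1 can also be written as a small data structure and checked automatically. The following is a minimal sketch in Python; the function names (`items_per_objective`, `items_per_type`, `uncovered_objectives`) are hypothetical helpers invented for this illustration, and the counts are those described for Table 1.

```python
# The Table 1 blueprint: item type -> {objective: number of items}.
# Empty cells in the table are simply left out.
blueprint = {
    "Multiple Choice": {"Objective 1": 5, "Objective 2": 3, "Objective 3": 2},
    "Short Essay": {"Objective 2": 1},
    "Performance Task": {"Objective 3": 1},
}


def items_per_objective(blueprint):
    """Total number of items planned for each learning objective."""
    totals = {}
    for counts in blueprint.values():
        for objective, n in counts.items():
            totals[objective] = totals.get(objective, 0) + n
    return totals


def items_per_type(blueprint):
    """Total number of items of each type across all objectives."""
    return {item_type: sum(counts.values()) for item_type, counts in blueprint.items()}


def uncovered_objectives(blueprint, objectives):
    """Objectives from the given list that have no items planned at all."""
    covered = items_per_objective(blueprint)
    return [obj for obj in objectives if covered.get(obj, 0) == 0]


print(items_per_objective(blueprint))
print(items_per_type(blueprint))
# "Objective 4" is a made-up extra objective used to show the coverage check.
print(uncovered_objectives(blueprint, ["Objective 1", "Objective 2", "Objective 3", "Objective 4"]))
```

A check like `uncovered_objectives` only confirms that each objective has at least one item; deciding whether the number and mix of items is adequate still takes teacher judgement.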

The number and type of items needed to assess students for each objective are not set by formulas or checklists. Each objective should be covered with enough items to get a consistent (reliable) estimate of student knowledge, and that is determined by both the number and quality of the items. Items that are well-written, whether multiple choice or constructed response, reduce the amount of error associated with the measurement and provide more reliable information about what students know and can do.
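
One way to see why adding items helps, but with diminishing returns, is the classical Spearman-Brown prediction formula from test theory. It is not taken from the sources cited in this post, and it assumes that any added items are of the same quality as the existing ones. The sketch below uses a hypothetical function name, `spearman_brown`, and a made-up starting reliability of 0.60.

```python
def spearman_brown(reliability, length_factor):
    """Predicted score reliability after changing test length.

    `reliability` is the current reliability (between 0 and 1) and
    `length_factor` is new length divided by old length (2 = twice as
    many items). Assumes added items match the quality of existing ones.
    """
    return (length_factor * reliability) / (1 + (length_factor - 1) * reliability)


current = 0.60  # hypothetical reliability of a short quiz
doubled = spearman_brown(current, 2)  # about 0.75
tripled = spearman_brown(current, 3)  # about 0.82
print(f"Doubling the items: {doubled:.2f}")
print(f"Tripling the items: {tripled:.2f}")
```

In this example, doubling the quiz raises the predicted reliability from 0.60 to about 0.75, while tripling it adds only a little more. Because the formula assumes equally good items, padding a test with poorly written items would not deliver these gains, which is consistent with the point that quality matters as much as quantity.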

Teachers must use their judgement and classroom experience to decide what mix of item types will provide the best picture of student learning in their classrooms. Educators can build assessment expertise by starting with clear learning objectives and working to align the quiz or test items, either pulled from item banks or written locally, with those objectives.

Footnotes

1) https://testing.byu.edu/handbooks/WritingEffectiveEssayQuestions.pdf, p. 15.

2) https://www.rasch.org/mra/mra-02-09.htm

3) For a description and discussion of Webb’s Depth of Knowledge levels, see https://www.edutopia.org/blog/webbs-depth-knowledge-increase-rigor-gerald-aungst

4) https://testing.byu.edu/handbooks/WritingEffectiveEssayQuestions.pdf, pp. 15, 17-18.

5) https://cft.vanderbilt.edu/guides-sub-pages/writing-good-multiple-choice-test-questions/

6) https://testing.byu.edu/handbooks/WritingEffectiveEssayQuestions.pdf, p. 18.

7) http://robertkaplinsky.com/is-depth-of-knowledge-complex-or-complicated/